Blurring Lines Between Machine and Biology

The original article can be found on Roboalliance.com. Roboalliance will be discontinued by its founding sponsor, Sharp, on July 2nd, 2018.

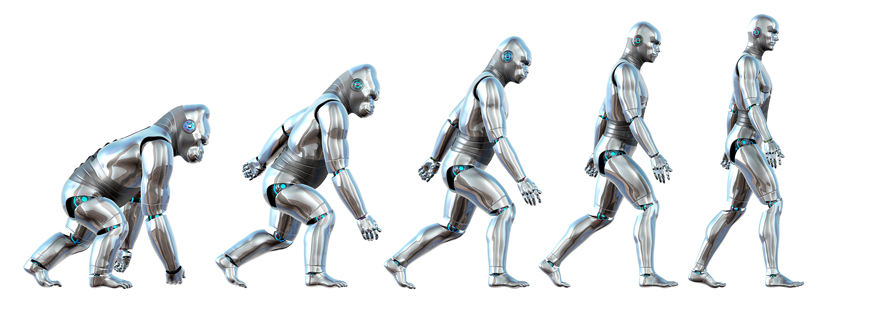

In the simpler days of the not-too-distant past, the distinction between a user and his machines was clear. The user, a human, was the master: making all the decisions, making all the observations and driving the machines’ actions in great detail. Machines were essentially sophisticated hammers, nothing more than a useless pile of metal and plastic without their user.

Steady improvements in computing and sensing technologies, coupled with algorithmic discoveries relating to decision making, have transformed machines from passive entities into active partners of humans. With the availability of more capable machines and the unrelenting aspiration to achieve ever higher levels of efficiency comes the unavoidable removal of humans from process flows. This shift is in fact not novel at all. Humans have always been replaced by other agents, be they machines or animals, to increase productivity. For instance, cows replaced humans in ploughing farmland many thousands of years ago. Artificial intelligence software replacing the taxi driver today is just the most recent chapter in a long book. That said, humans are now being pushed out of workflows at such an astounding speed that it presents a major social problem and is starting to cause a small panic. Discussions of the impending technological unemployment problem due to automation, and of proposed solutions such as Universal Basic Income, are some of the fallout of this tectonic shift.

While conversations on the effects of automation on human societies assume mostly dystopian overtones, we at Robolit believe that this is a rather myopic perspective. It is true that the human society of today will cease to exist, and almost any change is scary, especially for the older crowd. However, the next phase in human evolution, where the historically clear boundary between user and machine will be ever more blurred, will be much more exciting and full of opportunities. In this article we will look into some examples from the global research community, as well as some Robolit projects, that signal great potential in this new era.

To see the opportunities in this new era, one must change one’s perspective in a fundamental manner. More specifically, we note that in this new paradigm the term user does not necessarily imply a human, a machine does not have to be an electromechanical system of some sort, and the top-down decision hierarchy will not remain. In contrast to the classical human-machine interface literature, where a human is always assumed to be at the top of the decision totem pole driving collections of artificial systems, we observe that this emerging era offers a much richer set of level partnerships between artificial and biological systems.

Historically, and even still today, humans surpass machines in higher level decision making in almost any practical scenario. For this very reason we are accustomed to having humans assume the decision making role. However, the steady rate of progress in decision making heuristics in general, and artificial intelligence in particular, suggests that in the near future computers will come on par with, and maybe even exceed, their human counterparts in this area. Applications in our daily life such as natural language processing, autonomous driving and face recognition are just a few examples showing signs of this inevitable future.

That said, the fast-paced developments in information processing capabilities are not quite matched in other aspects of general machine design. Most notably, physical capabilities such as dexterous interaction with objects or locomotion in broken and unstable natural settings are lacking in contemporary systems. Undoubtedly, machines will eventually achieve physical qualities that match biological systems. However, it is clear that the rate of improvement in physical capabilities is significantly lower than that of information processing capabilities. Consequently, we note that there will be a time period in which artificial systems provide a high level of intelligence while lacking physical capabilities. We believe this is where the next family of killer applications lies.

There are numerous examples in the global research arena that beautifully demonstrate the potential of properly integrating artificial and biological agents. For instance, work by David Eagleman demonstrates that the flexible nature of neural systems in humans can be taken advantage of to introduce new sensory capabilities that are not available to us by nature. New perspectives, faster access to information, and a deeper understanding of big data with assistive analysis software are just some of the new super powers this marriage between biology and technology will offer us humans.

Inspired by this observation, at Robolit we identified a unique opportunity for a novel human-machine partnership where computers take on high dimensional information processing and decision making tasks while humans perform physical tasks in the field as identified by computers. A specific example of this operational model is the Hotel Operation System (hOS) under development in partnership with Drita Hotel Resort & Spa. The core of this system is a data center that is tied to numerous information sources across the hotel grounds, some collected automatically through IoT devices and some entered manually by human operators. A family of artificial intelligence software applications constantly processes the incoming data streams and produces decisions. In turn, these decisions are relayed to the proper employees through their smart phones and/or tablets to be physically executed. For instance, a room check-out at the front desk directs housekeeping to clean the room and guest relations to contact the guest to administer the exit survey. Eliminating middle management provides a significant cost reduction, while improved speed and follow-through improve the user experience. In essence, the employees act as remote manipulators and sensors for the central computer and decision making software. This approach improves upon the shortcomings of the human decision making process while avoiding the need to develop robotic systems that are extremely costly at this moment in time.
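The check-out example above can be pictured as a small rule table that fans one event out into tasks for several departments. The sketch below is only an illustration of that idea and does not describe the actual hOS design: the event name `room_checkout`, the department labels, the `Task` type and the `dispatch` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    department: str  # who executes the task in the field
    action: str      # what they are asked to do
    room: str        # where the task applies


# Hypothetical rule table: one incoming event type fans out to several tasks.
RULES = {
    "room_checkout": [
        ("housekeeping", "clean the room"),
        ("guest_relations", "contact the guest for the exit survey"),
    ],
}


def dispatch(event_type: str, room: str) -> list[Task]:
    """Turn one event into the tasks that would be pushed to employees' devices."""
    return [Task(dept, action, room) for dept, action in RULES.get(event_type, [])]


tasks = dispatch("room_checkout", room="214")
for task in tasks:
    print(f"{task.department}: {task.action} (room {task.room})")
```

In a real deployment the rule table would presumably be replaced by the decision-making software the article describes; the point here is only that employees receive concrete, already-decided tasks.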

Another novel example of machine/biology partnership can be observed in the cattle management system under development by Vence Corp, another project in which Robolit has the opportunity to participate as a technology partner. At its core, the Vence system aims to influence the swarm behavior of cattle herds in order to move animals across their pasture to achieve optimal grazing. While in its initial incarnation the Vence system is yet another master/slave type controlled biological system application, in the long term a Vence-powered cow will be a free-range animal that is granted extra sensations and instinctual drives enabling it to find the best grass in its environment. Much like the hOS-powered hotel employees or the wearer of David Eagleman’s vibrating jacket, the cattle of the future in the Vence architecture, non-human biological users, will take advantage of the new senses that technological integration offers, and will be fed better and live more freely.

Our final example of advantageous partnership opportunities between biological and artificial systems is Sharp’s Intellos automated unmanned ground vehicle (A-UGV). While at first glance the Intellos system is a simple mobile security camera, that is just the tip of the complete solution offered by Sharp. The essence of the Intellos system is based on the very same observations that the previous hOS and Vence examples take advantage of, i.e., augmenting humans with powerful data gathering and decision making capabilities while taking advantage of humans where they still exceed their artificial counterparts. Allowing a single security person to be at multiple points at the same time, and to process tens of video streams simultaneously to identify what requires in-person attention, is the competitive advantage Intellos offers.

The convergence of various fields and the ubiquitous availability of computational power, communication bandwidth and freely available algorithmic mechanisms will open up new avenues for marrying biological systems with artificial capabilities. Whether we like it or not, the merging between us and machines has already begun with smart phones. It is important to identify the “good” partnerships that enrich our lives. Just as with the bad, animated-GIF-ridden web site designs of the 90’s, not every application of a technological paradigm produces a good product. It is our job as creators of the future to build clean and functional marriages between nature and machine.

Convolution of UI Technologies in Robotics

The original article can be found on Roboalliance.com. Roboalliance will be discontinued by its founding sponsor, Sharp, on July 2nd, 2018.

Any given system, be it a smart phone or a military drone, is a collection of heavily interconnected components where the system-level value proposition depends upon two fundamental factors:

- the quality of the individual components; and

- the quality of integration among them.

There is an important, but rather subtle, corollary to this statement which establishes a guiding rule in our R&D strategy at Robolit: when the underlying components present significant variation in their levels of technological maturity, the overall value expression of the system is primarily determined by the least mature technologies in the mix. While such maturity imbalance among system components is a problem, it can also serve as an invaluable guide for developers to identify the areas of R&D that will have the highest return on investment.

To illustrate this observation, consider a simple unmanned drone and the flight duration as a system-level performance metric. Two fundamental ingredients in any drone system are the on-board processor and the battery. For decades there has been a non-stop geometric growth in processing power density. This rapid progress in the available processing power, however, offers very little positive change in system-level performance as measured by flight duration.

In this illustrative scenario, it is the limitations of battery technology, the component with the lower level of maturity, that dictate the overall system value. Conversely, virtually all leaps in UAV systems in the past 15 years have been fueled by improvements in the least mature components of their respective time period. For instance, the advent of rare earth magnet brushless motors, with their significantly increased power density, led to the development and profusion of today’s ubiquitous electric quad-fliers.
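A back-of-the-envelope calculation makes the drone example concrete. The numbers below are invented purely for illustration and do not describe any particular vehicle; the model is an idealised one that divides usable battery energy by total power draw, and the function name `flight_minutes` is our own.

```python
def flight_minutes(battery_wh: float, propulsion_w: float, processor_w: float) -> float:
    """Idealised endurance: usable battery energy divided by total power draw."""
    return 60.0 * battery_wh / (propulsion_w + processor_w)


# Hypothetical baseline: 50 Wh battery, 150 W propulsion, 5 W on-board processor.
baseline = flight_minutes(50.0, 150.0, 5.0)

# A processor doing the same work on half the power barely moves the result...
better_cpu = flight_minutes(50.0, 150.0, 2.5)

# ...while 20% more battery energy at the same weight helps proportionally.
better_battery = flight_minutes(60.0, 150.0, 5.0)

print(f"baseline:       {baseline:.1f} min")
print(f"better CPU:     {better_cpu:.1f} min")
print(f"better battery: {better_battery:.1f} min")
```

With these assumed figures the processor improvement adds roughly a third of a minute to a flight of about 19 minutes, whereas the battery improvement adds almost four minutes.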

As a member of the ASTM-NIST-DHS E54 Standard Test Methods community and the RoboCup Rescue League organizing committee, the Robolit team enjoys the unique opportunity to observe, compare and contrast a wide range of search and rescue robot applications, both academic and commercial in origin. Analysis of data from task-oriented, eyes-off tests has led our team to the conclusion that a key shortcoming in today’s remotely operated system (RoS) design is its ineffective approach to user interfacing (UI). Following our guiding principle explained above, we strongly believe that the advent of several new control and virtual reality technologies presents a unique opportunity to induce a paradigm shift in UI design and lead to the next leap in RoS.

Before we go into more detail about our vision for UI design, it is helpful to first state the fundamental purpose of UI and then review state-of-the-art UI mechanisms, contrasting them against our developing design philosophy. We will partition this discussion in accordance with the two basic pathways any UI is responsible for:

- projection of the user’s intent to the remote system; and

- providing situational awareness to the user.

Projection of the user intent—the command path—aims to make the remote machine execute a task in the physical environment. Examples of user intent may be displacement of the entire vehicle to a new spatial position, or manipulation of an object in the work space, such as turning a door knob.

In today’s stereotypical design approach, the command pathway employs an input device, such as a joystick, whose input axes are statically assigned in a one-to-one manner to physical actuators in the remote system. For example, the forward push of a joystick drives the wheels in a UGV which in turn causes the vehicle to move forward, or pressing a button activates a servo motor to open the gripper at the end of a robotic arm. This static joint-by-joint control is a simple and acceptable solution for lower degree of freedom (DOF) systems. However, as the complexity of the system and/or the task increases the joint level control rapidly becomes unmanageable for human operators. For these systems, user fatigue is a major problem reducing both task execution speed and quality.

The key to improving the command pathway lies in dimensional reduction in the control construct. In essence, dimensional reduction allows the user to specify only the bare minimum of commands needed to execute the task. This, in fact, is not a new concept. For instance, an old and established example of dimensional reduction in control is end-effector control in robotic arms, where the user dictates the position and orientation of the end-effector with respect to the body coordinate system but not the numerous joints along the arm connecting the end-effector to the body. Even though this control strategy is well-known, very few commercial or military systems actually adopt it.
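The end-effector control idea above can be illustrated with a toy planar arm: the operator supplies only a target point, and the controller works out all of the joint angles. This is a minimal sketch under our own assumptions (three revolute joints in a plane, made-up link lengths, a damped least-squares update with hand-picked gain and damping); it is not taken from any particular product.

```python
import math

LINK_LENGTHS = (1.0, 1.0, 0.5)  # hypothetical link lengths, arbitrary units


def cumulative_angles(joints):
    """Absolute orientation of each link, given relative joint angles."""
    total, angles = 0.0, []
    for q in joints:
        total += q
        angles.append(total)
    return angles


def end_effector(joints):
    """Forward kinematics: joint angles -> (x, y) of the end-effector."""
    angles = cumulative_angles(joints)
    x = sum(l * math.cos(a) for l, a in zip(LINK_LENGTHS, angles))
    y = sum(l * math.sin(a) for l, a in zip(LINK_LENGTHS, angles))
    return x, y


def jacobian_columns(joints):
    """Column j is d(x, y)/d(joint j): joint j moves link j and every later link."""
    angles = cumulative_angles(joints)
    n = len(joints)
    return [
        (-sum(LINK_LENGTHS[i] * math.sin(angles[i]) for i in range(j, n)),
         sum(LINK_LENGTHS[i] * math.cos(angles[i]) for i in range(j, n)))
        for j in range(n)
    ]


def step_towards(joints, target, gain=0.5, damping=0.1):
    """One damped least-squares update: dq = gain * J^T (J J^T + damping^2 I)^-1 e."""
    x, y = end_effector(joints)
    ex, ey = target[0] - x, target[1] - y
    cols = jacobian_columns(joints)
    a11 = sum(cx * cx for cx, _ in cols) + damping ** 2
    a12 = sum(cx * cy for cx, cy in cols)
    a22 = sum(cy * cy for _, cy in cols) + damping ** 2
    det = a11 * a22 - a12 * a12
    wx = (a22 * ex - a12 * ey) / det  # solve the 2x2 system for w
    wy = (a11 * ey - a12 * ex) / det
    return [q + gain * (cx * wx + cy * wy) for q, (cx, cy) in zip(joints, cols)]


# The operator specifies two numbers (the target); three joints follow.
joints = [0.3, 0.3, 0.3]
target = (1.5, 1.0)
for _ in range(200):
    joints = step_towards(joints, target)
print("joint angles:", [round(q, 3) for q in joints])
print("end-effector:", tuple(round(c, 3) for c in end_effector(joints)))
```

Even in this small case the operator's input has fewer dimensions than the arm has joints; the gap grows quickly for arms with more joints.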

Yet end-effector control is just the tip of what a dimensional-reduction control construct can offer. In our design paradigm the control path assumes the form of an expanded set of “composable, assistive controllers,” each inducing a selectable, task-specific dimensional reduction. The static assignment of input device axes to actions is also abandoned in favor of a more generic view. Mirroring the dynamic, on-demand composition of canonical assistive controllers, input device axes are assigned varying roles accordingly.

Consider the task of opening a door by turning the door knob. Such an action requires a circular motion about the axis of the knob. This task can be achieved using end-effector control. It will be significantly simpler than with a joint level control system, but it still requires the user to manually match and hold the knob axis with the end-effector rotation axis, which in practice is rather tricky. In essence, this very task only requires a single dimensional command input: the angle of rotation of the knob or, even simpler, the on/off configuration of the knob. In our philosophy a door-knob-manipulation assistive controller would be one of the many selectable control components available to the operator. When it is activated, the operator first selects the knob; a depth sensor supported vision system then approximates the axis of rotation of the knob of interest and configures the assistive controller with the captured parameters of the knob. From this point forward the only thing the operator needs to do is use a general-purpose single degree of freedom (DOF) input device to issue a command that will be interpreted as the amount of knob rotation or its on/off state. With the numerous geometric details of knob rotation removed from the operator’s attention, user fatigue is reduced to a bare minimum.
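To make the single-input idea tangible, the sketch below turns one scalar command (the knob angle) into an end-effector position on a circle about the knob axis, using Rodrigues' rotation formula. It assumes the vision system has already supplied the axis; the class name `KnobController`, its fields and the example geometry are hypothetical, and the gripper orientation, which would have to rotate as well, is left out for brevity.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


@dataclass
class KnobController:
    axis_point: Vec3   # a point on the knob's rotation axis (from the vision system)
    axis_dir: Vec3     # unit vector along that axis
    grasp_point: Vec3  # where the gripper holds the knob at angle zero

    def end_effector_position(self, angle: float) -> Vec3:
        """Rotate the grasp point about the knob axis by `angle` radians."""
        k = self.axis_dir
        v = tuple(g - p for g, p in zip(self.grasp_point, self.axis_point))
        kxv, kdv = _cross(k, v), _dot(k, v)
        c, s = math.cos(angle), math.sin(angle)
        rotated = tuple(v[i] * c + kxv[i] * s + k[i] * kdv * (1.0 - c) for i in range(3))
        return tuple(p + r for p, r in zip(self.axis_point, rotated))


# Hypothetical knob: axis pointing out of the door along x, gripped 3 cm off-axis.
knob = KnobController(axis_point=(0.5, 0.0, 1.0),
                      axis_dir=(1.0, 0.0, 0.0),
                      grasp_point=(0.5, 0.03, 1.0))

# The operator's entire command is one number.
position = knob.end_effector_position(math.radians(90))
print(tuple(round(c, 4) for c in position))
```

The resulting position could then be handed to an end-effector controller, so the operator never deals with the axis alignment directly.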

It is clear that turning a door knob would be simpler when such a door-knob-manipulation assistive controller is employed. However, the real strength of our control paradigm stems from the construction of complex behavioral controllers through dynamic composition of canonical assistive controller components. Continuing to build on the above scenario, the task of opening a door requires not only turning the door knob but also pushing and/or pulling the door in a specific manner as dictated by the position and orientation of the door hinges. Just like door knob manipulation, this sub-task, too, can be reduced to a one dimensional action requiring a single input. The two assistive control components working “concurrently” and accepting only two “task oriented” inputs would render the entire task as natural and straightforward for the operator as any daily activity.
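One way to picture on-demand composition is a small composer that binds each activated assistive controller to the next free input axis and then runs all active controllers together. Everything in this sketch is hypothetical: the `Composer` class, the axis names, and the two stand-in controllers with their 60 and 90 degree ranges are placeholders for illustration, not a description of Robolit's implementation.

```python
from typing import Callable, Dict

# For this sketch, an assistive controller is any function that turns ONE
# operator input into a dictionary of low-level set-points.
AssistiveController = Callable[[float], Dict[str, float]]


def knob_controller(turn: float) -> Dict[str, float]:
    """Hypothetical: input in [0, 1] -> knob rotation in degrees."""
    return {"knob_angle_deg": 60.0 * max(0.0, min(1.0, turn))}


def hinge_controller(swing: float) -> Dict[str, float]:
    """Hypothetical: input in [0, 1] -> door swing about its hinges in degrees."""
    return {"door_angle_deg": 90.0 * max(0.0, min(1.0, swing))}


class Composer:
    """Binds active controllers to free input axes and evaluates them together."""

    def __init__(self, axes):
        self.free_axes = list(axes)
        self.bindings: Dict[str, AssistiveController] = {}

    def activate(self, controller: AssistiveController) -> str:
        axis = self.free_axes.pop(0)  # axis roles are assigned on demand
        self.bindings[axis] = controller
        return axis

    def deactivate(self, axis: str) -> None:
        del self.bindings[axis]
        self.free_axes.append(axis)   # the axis becomes available again

    def command(self, inputs: Dict[str, float]) -> Dict[str, float]:
        setpoints: Dict[str, float] = {}
        for axis, controller in self.bindings.items():
            setpoints.update(controller(inputs.get(axis, 0.0)))
        return setpoints


composer = Composer(axes=["stick_x", "stick_y"])
knob_axis = composer.activate(knob_controller)
hinge_axis = composer.activate(hinge_controller)

# Two task-oriented inputs drive the whole door-opening behaviour.
setpoints = composer.command({knob_axis: 1.0, hinge_axis: 0.5})
print(setpoints)
```

The same physical axis can later be released and handed to a different controller, which is the sense in which input roles vary with the active composition.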

The situational awareness pathway in most present day commercial UI systems is as archaic as their mostly joint level control pathway. Typically, data gathered from the RoS is projected onto one or more screens in multiple distinct video playback windows and gauge widgets. This 90’s approach to display design requires the operator to observe multiple feeds and “mentally” construct the situation. This extremely cumbersome architecture imposes a very expensive mental load on the operator, further reducing task execution speed and accuracy.

In contrast, we adopt a situational awareness pathway that is fundamentally based on virtual reality (VR) goggles and augmented reality (AR). The goal is to make the stream of information from the remote machine to the operator as streamlined and naturally parseable as possible. The key to rapid situational awareness is elimination of any need for the operator to do mental calculations.

Following this design approach, a UI system under development at Robolit employs a VR goggle to virtually displace the operator to the physical position of the RoS of interest.

Fundamentally, we use head tracking to naturally capture the operator’s observational intention. For instance, using natural head movements, this system allows the operator to look in different directions from the perspective of the RoS cockpit. However, observational intention is not limited to gaze direction. Certain head movements, the duration of a gaze and many other extracted parameters provide clues that guide what is presented to the user. Contrast this approach with the use of a joystick to direct onboard cameras, buttons to select widgets and multiple disjointed playback windows, and the simplicity and speed of our construct become clear.
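As a rough illustration of extracting intention from head tracking, the sketch below does two things: it clamps head angles to a camera mount's range, and it flags a sustained gaze when the head stays within a small cone for long enough. The thresholds, the pan/tilt limits and the names `camera_command` and `DwellDetector` are assumptions made for this example, and the angular drift uses a simple small-angle approximation.

```python
import math


def camera_command(yaw_deg, pitch_deg, pan_limit=170.0, tilt_limit=60.0):
    """Clamp the operator's head angles to hypothetical pan/tilt limits."""
    pan = max(-pan_limit, min(pan_limit, yaw_deg))
    tilt = max(-tilt_limit, min(tilt_limit, pitch_deg))
    return pan, tilt


class DwellDetector:
    """Reports True once the gaze has stayed near one direction long enough."""

    def __init__(self, max_drift_deg=5.0, min_duration_s=1.5):
        self.max_drift_deg = max_drift_deg    # hypothetical tolerance
        self.min_duration_s = min_duration_s  # hypothetical dwell time
        self._anchor = None                   # direction where the dwell started
        self._since = 0.0                     # time at which it started

    def update(self, t, yaw_deg, pitch_deg):
        moved_away = (
            self._anchor is None
            or math.hypot(yaw_deg - self._anchor[0],
                          pitch_deg - self._anchor[1]) > self.max_drift_deg
        )
        if moved_away:
            # Restart the dwell timer at the new gaze direction.
            self._anchor = (yaw_deg, pitch_deg)
            self._since = t
            return False
        return t - self._since >= self.min_duration_s


# Hypothetical head-tracker samples: (time in s, yaw in deg, pitch in deg).
samples = [(0.0, 10.0, 0.0), (0.5, 11.0, 1.0), (1.0, 10.5, 0.5),
           (1.6, 10.0, 0.0), (2.0, 40.0, 0.0)]
detector = DwellDetector()
flags = [detector.update(t, yaw, pitch) for t, yaw, pitch in samples]
print(flags)
```

A dwell flag of this kind could, for example, trigger extra detail about whatever the operator is looking at, without any button press.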

There is a duality between the command and awareness pathways in our paradigm. While the use of VR goggles provides a significant advantage, the real value in our approach stems from the composition of multiple informational sources into a monolithic video stream through the use of augmented reality.

In this brief article we provided a very high level glimpse of our roadmap for an integrated UI architecture that aims to minimize user fatigue through the elimination of mental computations. We strongly believe that this can be achieved by proper utilization of on-demand assistive controllers with extreme dimensional reduction in the command pathway, and VR goggles employing state parameterized augmented reality. Our early prototypes present very encouraging results. We are looking forward to bringing these ideas to end-users with value-added partners.